- mask/ # mask of consistent flow estimation between frame pairs
- vis_flow_warped/ # visualizing flow accuracy by warping one frame to another using the estimated flow
- depth_colmap_dense/ # COLMAP dense depth maps converted to disparity maps in .raw format
- metadata.npz # camera intrinsics and extrinsics converted from COLMAP sparse reconstruction
- depth_$/ # initial disparity estimation using the original monocular depth model before test-time training
- flow_list_0.20.json # indices of frame pairs passing overlap ratio test of threshold 0.2
- metadata_scaled.npz # camera intrinsics and extrinsics after scale calibration; these are the camera parameters used in the test-time training process
- scales.csv # frame indices and corresponding scales between initial monocular disparity estimation and COLMAP dense disparity maps
- depth_scaled_by_colmap_dense/ # monocular disparity estimation scaled to match COLMAP disparity results
- vis_calibration_dense/ # for debugging scale calibration; frame_000000_warped_to_000029.png warps frame_000000 to frame_000029 using scaled camera translations and disparity maps from the initial monocular depth estimation
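The scale calibration entries above relate a monocular disparity map to a COLMAP dense disparity map through a per-frame scale. The source does not say how that scale is estimated, so the following is only a minimal sketch under one common assumption: take the median ratio of COLMAP disparity to monocular disparity over pixels that are valid in both maps. The function name `calibration_scale` and the toy values are hypothetical and are not taken from the repository.

```python
from statistics import median


def calibration_scale(mono_disparity, colmap_disparity):
    """Return the median ratio of COLMAP to monocular disparity.

    Assumption (not stated in the source): a non-positive value marks a
    pixel without a valid estimate, and the median is used for robustness.
    Both inputs are flattened disparity maps of equal length.
    """
    ratios = [
        c / m
        for m, c in zip(mono_disparity, colmap_disparity)
        if m > 0 and c > 0  # keep only pixels valid in both maps
    ]
    if not ratios:
        raise ValueError("no pixels with valid disparity in both maps")
    return median(ratios)


# Toy flattened maps; 0.0 stands for a pixel COLMAP did not reconstruct.
mono = [0.50, 0.25, 0.10, 0.40]
colmap = [1.00, 0.50, 0.00, 0.80]

scale = calibration_scale(mono, colmap)
# Monocular disparity rescaled to match the COLMAP disparity.
scaled_mono = [scale * m for m in mono]
```

With real data the maps would be 2D arrays, and one such scale per frame would correspond to a row in a file like scales.csv.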

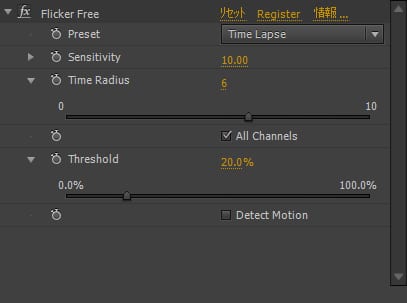


We present an algorithm for reconstructing dense, geometrically consistent depth for all pixels in a monocular video. We leverage a conventional structure-from-motion reconstruction to establish geometric constraints on pixels in the video. Unlike the ad-hoc priors in classical reconstruction, we use a learning-based prior, i.e., a convolutional neural network trained for single-image depth estimation. At test time, we fine-tune this network to satisfy the geometric constraints of a particular input video, while retaining its ability to synthesize plausible depth details in parts of the video that are less constrained. We show through quantitative validation that our method achieves higher accuracy and a higher degree of geometric consistency than previous monocular reconstruction methods. Visually, our results appear more stable. Our algorithm is able to handle challenging hand-held captured input videos with a moderate degree of dynamic motion. The improved quality of the reconstruction enables several applications, such as scene reconstruction and advanced video-based visual effects.

The demo runs everything, including flow estimation and test-time training, except the COLMAP part, for quick demonstration and ease of installation. To enable testing the COLMAP part, you can delete results/ayush/colmap_dense and results/ayush/depth_colmap_dense and then run the demo command again. Your results can be different due to randomness in the test-time training process.

- color_depth_mc_depth_colmap_dense_B0.1_R1.0_PL1-0_LR0.0004_BS4_Oadam.mp4 # comparison of disparity maps from Mannequin Challenge, COLMAP and ours
- eval/ # disparity maps and losses after each epoch of training

Please refer to params.py or run python main.py --help for the full list of parameters. Here I demonstrate some examples for common usage of the system.

Place your video file at $video_file_path.

Calibrate the camera using the PINHOLE (fx, fy, cx, cy) or SIMPLE_PINHOLE (f, cx, cy) model. Camera intrinsics calibration is optional but suggested for more accurate and faster camera registration. We typically calibrate the camera by capturing a video of a textured plane with really slow camera motion while trying to let target features cover the full field of view, selecting non-blurry frames, and running COLMAP on these images.

- frames.txt # metadata about number of frames, image resolution and timestamps for each frame
- color_full/ # extracted frames in the original resolution
- color_down/ # extracted frames in the resolution for disparity estimation
- color_flow/ # extracted frames in the resolution for flow estimation
- flow_list.json # indices of frame pairs to finetune the model with
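The two COLMAP camera models named in the calibration step differ only in whether the focal length is shared between the x and y axes. As an illustration, here is a minimal sketch that expands either parameter tuple into a 3x3 intrinsics matrix. The helper name `intrinsics_matrix` and the numeric values are hypothetical, and the repository may store intrinsics in a different form.

```python
def intrinsics_matrix(model, params):
    """Build a 3x3 pinhole intrinsics matrix from COLMAP-style parameters.

    PINHOLE takes (fx, fy, cx, cy); SIMPLE_PINHOLE takes (f, cx, cy) and
    uses the same focal length f for both axes.
    """
    if model == "PINHOLE":
        fx, fy, cx, cy = params
    elif model == "SIMPLE_PINHOLE":
        f, cx, cy = params
        fx = fy = f
    else:
        raise ValueError(f"unsupported camera model: {model}")
    return [
        [fx, 0.0, cx],
        [0.0, fy, cy],
        [0.0, 0.0, 1.0],
    ]


# Hypothetical values for a 640x480 image with the principal point at the center.
K_simple = intrinsics_matrix("SIMPLE_PINHOLE", (500.0, 320.0, 240.0))
K_full = intrinsics_matrix("PINHOLE", (500.0, 500.0, 320.0, 240.0))
```

When fx equals fy, the two models describe the same camera, so SIMPLE_PINHOLE is the smaller choice if the pixels can be assumed square.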
